Data Scraping - Its Meaning, Link To Google’s Latest Action

The usefulness of data scraping has been proven many times already. However, it can have implications for privacy, particularly when personal information is collected without consent or in violation of terms of service. Respecting legal and ethical guidelines and safeguarding data are crucial to mitigate privacy concerns associated with data scraping activities.

Author: Gordon Dickerson · Reviewer: Tyreece Bauer · Jul 14, 2023

What role does data scraping have in the digital world?

In today’s interconnected digital landscape, information is a valuable resource that fuels countless industries and drives innovation.

Every day, an unfathomable amount of data is generated and made available on the internet, representing a vast repository of knowledge, insights, and opportunities.

However, accessing and organizing this wealth of information can be a daunting task.

That’s where the power of data scraping comes into play.

By harnessing data scraping, individuals and organizations can turn raw data into actionable intelligence.


What Is Data Scraping?

Data scraping is the automated process of extracting large amounts of data from websites.

It involves:

- using software tools or scripts to access and gather information from web pages, and

- then storing that data in a structured format for further analysis or use

Here’s a step-by-step explanation of how data scraping typically works:

1. Identification of the target website

The first step is to identify the website or web pages from which you want to extract data. This could be any website that contains relevant information you're interested in.

2. Choosing the scraping tool

Once you’ve identified the target website, you need to select an appropriate scraping tool or library that will enable you to automate the data extraction process.

3. Analyzing the website structure

Before scraping the data, it’s important to understand the structure of the target website.

This involves examining the HTML code of the web pages to identify the specific elements (tags, classes, or IDs) that contain the desired data.

Inspecting the website’s source code or using browser developer tools can help in this process.

4. Writing the scraping code

Once you have a good understanding of the website’s structure, you can start writing the scraping code.

This typically involves using the chosen scraping tool and its associated programming language (e.g., Python) to:

- navigate through the website

- locate the desired data elements

- extract the relevant information
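Steps 3 and 4 can be sketched with Python's standard-library `html.parser`, which avoids third-party dependencies (many scrapers use libraries such as Beautiful Soup instead). This is a minimal sketch, not a production scraper: the page snippet, the `title` and `price` class names, and the helper `extract_by_class` are hypothetical stand-ins for whatever you found while inspecting the real site.

```python
from html.parser import HTMLParser


class ClassTextExtractor(HTMLParser):
    """Collect the text inside every <tag class="... target_class ..."> element.

    Simplification: assumes matching elements do not contain nested copies of the same tag.
    """

    def __init__(self, tag: str, target_class: str) -> None:
        super().__init__()
        self.tag = tag
        self.target_class = target_class
        self.results: list[str] = []
        self._capturing = False
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value can be None for bare attributes.
        classes = (dict(attrs).get("class") or "").split()
        if tag == self.tag and self.target_class in classes:
            self._capturing = True
            self._buffer = []

    def handle_data(self, data):
        # Text from child elements (e.g. an <a> inside the <h2>) is collected too.
        if self._capturing:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self.tag and self._capturing:
            self.results.append("".join(self._buffer).strip())
            self._capturing = False


def extract_by_class(html: str, tag: str, target_class: str) -> list[str]:
    parser = ClassTextExtractor(tag, target_class)
    parser.feed(html)
    parser.close()
    return parser.results


# Hypothetical page snippet standing in for a downloaded product listing.
page = """
<div class="product"><h2 class="title">Blue Mug</h2><span class="price">$12.50</span></div>
<div class="product"><h2 class="title"><a href="/p/2">Red Mug</a></h2><span class="price">$14.00</span></div>
"""

titles = extract_by_class(page, "h2", "title")
prices = extract_by_class(page, "span", "price")
print(list(zip(titles, prices)))  # [('Blue Mug', '$12.50'), ('Red Mug', '$14.00')]
```

Locating elements by tag plus class mirrors what you would see in the browser's developer tools, which is why step 3 comes first.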

5. Handling anti-scraping measures

Some websites implement measures to prevent or limit scraping activities, such as:

- CAPTCHAs

- IP blocking

- rate limiting

To overcome these obstacles, additional techniques like using proxies, rotating user agents, or incorporating delays between requests may be necessary.
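Of the techniques above, adding delays between requests is the simplest, and it also reduces load on the target server. A minimal sketch of a throttle that enforces a minimum gap between requests; the class name `Throttle` and the injectable `clock`/`sleep` parameters are illustrative choices (they make the timing testable), not part of any scraping library:

```python
import time
from typing import Callable, Optional


class Throttle:
    """Enforce a minimum delay between consecutive requests (a simple politeness delay)."""

    def __init__(self, min_interval: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last: Optional[float] = None

    def wait(self) -> float:
        """Block until min_interval seconds have passed since the previous call.

        Returns the number of seconds actually slept (0.0 if no wait was needed).
        """
        now = self._clock()
        slept = 0.0
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                slept = remaining
        self._last = self._clock()
        return slept
```

In a scraping loop you would call `throttle.wait()` right before each request, e.g. with `Throttle(min_interval=1.0)` for at most one request per second.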

6. Storing the scraped data

After successfully extracting the data, you need to store it in a suitable format for further use or analysis.

This could involve saving the data in a structured format (e.g., CSV, JSON, or a database).
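As a rough illustration, the same scraped records can be written to all three formats with Python's standard library. The records are hypothetical; `io.StringIO` stands in for a real file (in practice you would use `open("products.csv", "w", newline="", encoding="utf-8")`), and the SQLite database is in memory only.

```python
import csv
import io
import json
import sqlite3

# Hypothetical records produced by an earlier scraping step.
records = [
    {"name": "Blue Mug", "price": 12.50},
    {"name": "Red Mug", "price": 14.00},
]

# CSV: a header row plus one row per record.
csv_buffer = io.StringIO()
writer = csv.DictWriter(csv_buffer, fieldnames=["name", "price"])
writer.writeheader()
writer.writerows(records)
csv_text = csv_buffer.getvalue()

# JSON: preserves types and nesting, convenient for later processing.
json_text = json.dumps(records, indent=2)

# Database: an in-memory SQLite table; pass a file path instead to persist it.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (name TEXT, price REAL)")
conn.executemany("INSERT INTO products (name, price) VALUES (:name, :price)", records)
conn.commit()

print(csv_text)
print(conn.execute("SELECT name, price FROM products ORDER BY price").fetchall())
```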

7. Regular maintenance and legal considerations

It’s important to note that data scraping may raise legal and ethical concerns.

Why?

It’s because some websites prohibit or restrict scraping activities through their terms of service.

Therefore, review the website’s policies and consider the legality and ethical implications before proceeding with data scraping.
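One machine-readable signal worth checking (alongside, not instead of, the terms of service) is the site's `robots.txt` file, which Python's standard library can parse with `urllib.robotparser`. A minimal sketch; the `robots.txt` content and the bot name `my-research-bot` are made up for illustration:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real one lives at https://<site>/robots.txt
robots_txt = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

print(parser.can_fetch("my-research-bot", "https://example.com/products"))   # True
print(parser.can_fetch("my-research-bot", "https://example.com/private/x"))  # False
print(parser.crawl_delay("my-research-bot"))                                 # 5
```

Against a live site you would call `parser.set_url("https://example.com/robots.txt")` followed by `parser.read()` instead of `parse()`.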

Additionally, websites frequently update their structure or employ anti-scraping measures. So, periodic maintenance of the scraping code may be necessary to ensure it continues to function properly.
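One way to notice that a site's structure has changed is to check each scraping run for missing fields: when the selectors stop matching, fields come back empty. A minimal sketch; the required field names and the 20% failure threshold are hypothetical choices you would tune for your own scraper:

```python
REQUIRED_FIELDS = ("name", "price")  # hypothetical schema for a product page


def find_broken_records(records, required=REQUIRED_FIELDS):
    """Return the indexes of records missing any required field (None or empty string)."""
    return [i for i, rec in enumerate(records)
            if any(rec.get(field) in (None, "") for field in required)]


def scraper_looks_broken(records, required=REQUIRED_FIELDS, max_failure_ratio=0.2):
    """Heuristic: flag the run if nothing was scraped or too many records are incomplete."""
    if not records:
        return True
    failures = len(find_broken_records(records, required))
    return failures / len(records) > max_failure_ratio


latest_run = [{"name": "Blue Mug", "price": None}, {"name": "", "price": "14.00"}]
if scraper_looks_broken(latest_run):
    print("Selectors may be outdated; re-inspect the site's HTML.")
```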

Data scraping can be a powerful tool for various purposes, such as:

- market research

- competitive analysis

- content aggregation

- price comparison

- sentiment analysis

However, it’s crucial to conduct scraping activities responsibly and within the bounds of the law and website policies.

Types Of Data Scraping

There are several types of data scraping techniques, each suited for different purposes and sources of data.

Here are some common types of data scraping:

a. Web Scraping

This is the most common type of data scraping, which involves extracting data from websites.

It can be done by parsing the HTML or XML code of a web page and extracting relevant information, such as:

- text

- links

- images

- structured data
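For example, collecting a page's links and images means reading the `href` of `<a>` tags and the `src` of `<img>` tags, then resolving relative paths against the page URL. A minimal standard-library sketch; the page snippet and base URL are hypothetical:

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkImageExtractor(HTMLParser):
    """Collect absolute URLs for every <a href> and <img src> on a page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []
        self.images: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # urljoin turns relative paths such as "post-1.html" into absolute URLs.
        if tag == "a" and attributes.get("href"):
            self.links.append(urljoin(self.base_url, attributes["href"]))
        elif tag == "img" and attributes.get("src"):
            self.images.append(urljoin(self.base_url, attributes["src"]))


page = ('<p>Read <a href="post-1.html">the post</a> or <a href="/about">about us</a>.</p>'
        '<img src="img/cover.png" alt="Cover">')
extractor = LinkImageExtractor("https://example.com/blog/")
extractor.feed(page)
print(extractor.links)   # ['https://example.com/blog/post-1.html', 'https://example.com/about']
print(extractor.images)  # ['https://example.com/blog/img/cover.png']
```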

b. Screen Scraping

Screen scraping is similar to web scraping, but it focuses on extracting data from the user interface of software applications or desktop programs.

It involves capturing data displayed on the screen by:

- mimicking user interactions

- directly accessing the application’s underlying code

c. Text Scraping

Text scraping involves extracting specific text data from various sources, such as:

- documents

- emails

- PDF files

- social media posts

It may involve techniques to extract the desired information like:

- text pattern matching

- natural language processing (NLP)

- optical character recognition (OCR)
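Text pattern matching, the first of these techniques, is often done with regular expressions (NLP and OCR need dedicated libraries and are not shown). A minimal sketch; both patterns are hypothetical and deliberately narrow: the amount pattern assumes US-dollar values written with comma thousands separators.

```python
import re

# Hypothetical patterns: ISO-style dates (2023-07-11) and US-dollar amounts ($1,299.99).
DATE_PATTERN = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")
AMOUNT_PATTERN = re.compile(r"\$\d{1,3}(?:,\d{3})*(?:\.\d{2})?")

document_text = (
    "Invoice dated 2023-07-11: total due $1,299.99. "
    "A late fee of $25 applies after 2023-08-01."
)

dates = DATE_PATTERN.findall(document_text)
amounts = AMOUNT_PATTERN.findall(document_text)
print(dates)    # ['2023-07-11', '2023-08-01']
print(amounts)  # ['$1,299.99', '$25']
```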

d. Social Media Scraping

This type of scraping focuses on extracting data from social media platforms (e.g., Facebook, Twitter, LinkedIn, or Instagram).

It can involve:

- accessing public APIs provided by these platforms

- using web scraping techniques to extract data from publicly accessible profiles, posts, comments, or hashtags

e. Image Scraping

This is the process of extracting images from websites or other sources.

It may involve:

- downloading images from specific URLs

- combining web scraping with image recognition to extract only the images that meet specific criteria
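Before downloading anything, a scraper typically narrows a list of candidate URLs to actual image files. A minimal sketch that filters by file extension and drops duplicates; the extension set and `select_image_urls` are hypothetical choices, and extension checks are only a rough criterion (content-based filtering would need image recognition):

```python
import os
from urllib.parse import urlparse

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif", ".webp"}  # assumed criteria


def select_image_urls(urls, allowed=IMAGE_EXTENSIONS):
    """Keep unique URLs whose path ends in an allowed image extension, preserving order."""
    seen = set()
    selected = []
    for url in urls:
        # Use only the path, so query strings like "?w=200" don't hide the extension.
        ext = os.path.splitext(urlparse(url).path)[1].lower()
        if ext in allowed and url not in seen:
            seen.add(url)
            selected.append(url)
    return selected


candidates = [
    "https://example.com/a.PNG",
    "https://example.com/a.PNG",
    "https://example.com/page.html",
    "https://example.com/b.jpg?w=200",
]
print(select_image_urls(candidates))
```

Each selected URL could then be saved with `urllib.request.urlretrieve(url, filename)`, ideally with a delay between downloads.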

f. E-commerce Scraping

It involves extracting product data from:

- online marketplaces

- e-commerce websites

- retail platforms

It can include details like:

- product names

- descriptions

- prices

- ratings & reviews
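Prices are scraped as display text, so they usually need cleaning before comparison. A minimal sketch that converts strings like "$1,299.99" into `Decimal` (which avoids floating-point rounding in currency math); `parse_price` is a hypothetical helper, and it assumes "." as the decimal separator and "," as the thousands separator, so European formats such as "1.299,99 €" would need different handling:

```python
import re
from decimal import Decimal
from typing import Optional

_NUMBER = re.compile(r"\d+(?:\.\d+)?")


def parse_price(raw: str) -> Optional[Decimal]:
    """Convert a displayed price such as '$1,299.99' or 'USD 15' into a Decimal.

    Returns None when the text contains no number (e.g. 'Price on request').
    """
    match = _NUMBER.search(raw.replace(",", ""))
    return Decimal(match.group()) if match else None


# Hypothetical raw values as they might appear on product pages.
for raw in ["$1,299.99", "USD 15", "Price on request"]:
    print(raw, "->", parse_price(raw))
```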

g. Real-time Data Scraping

Real-time scraping involves extracting data from constantly updating sources or streaming data feeds.

This could include scraping data from:

- financial markets

- weather APIs

- news websites

- social media live feeds
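When a source offers no streaming or push API, the simplest form of near-real-time scraping is polling: fetch at a fixed interval and keep only content that changed. A minimal sketch that detects changes by hashing each response; `fetch` is a hypothetical callable standing in for an HTTP request, and `sleep` is injectable so the demo runs instantly:

```python
import hashlib
import time
from typing import Callable, Iterator, Optional


def poll_for_changes(fetch: Callable[[], str], interval: float, max_polls: int,
                     sleep: Callable[[float], None] = time.sleep) -> Iterator[str]:
    """Call fetch() repeatedly and yield its content only when it differs from the last poll."""
    last_digest: Optional[str] = None
    for poll in range(max_polls):
        content = fetch()
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        if digest != last_digest:
            last_digest = digest
            yield content
        if poll < max_polls - 1:
            sleep(interval)


# Demo with a hypothetical source whose content changes twice; sleep is stubbed out.
snapshots = iter(["price=100", "price=100", "price=101", "price=101", "price=99"])
for update in poll_for_changes(lambda: next(snapshots), interval=30, max_polls=5,
                               sleep=lambda s: None):
    print("changed:", update)
```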

h. Metadata Scraping

Metadata scraping involves extracting the metadata associated with various types of files (e.g., documents, images, audio files, or videos).

This metadata may include information like:

- file names

- creation dates

- sizes

- tags & geolocation data
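Basic file-system metadata (name, size, timestamps) is available through `os.stat`; tags and geolocation are format-specific (for example, EXIF data in photos) and need dedicated parsers, which this sketch does not cover. The helper `file_metadata` and the sample file are hypothetical. Note that it reports the modification time, because a true creation time is not available portably (on Unix, `st_ctime` is the time of the last metadata change).

```python
import os
import tempfile
from datetime import datetime, timezone


def file_metadata(path: str) -> dict:
    """Collect basic file-system metadata for one file."""
    info = os.stat(path)
    return {
        "file_name": os.path.basename(path),
        "size_bytes": info.st_size,
        # Last-modification time, converted to an ISO 8601 string in UTC.
        "modified": datetime.fromtimestamp(info.st_mtime, tz=timezone.utc).isoformat(),
    }


# Demo on a throwaway file created in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    sample = os.path.join(tmp, "report.txt")
    with open(sample, "w", encoding="utf-8") as handle:
        handle.write("hello")
    meta = file_metadata(sample)

print(meta)
```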

While data scraping can be a powerful tool for extracting information, it is essential to:

- comply with legal and ethical guidelines

- respect website terms of service

- respect the privacy of the people whose data is collected and obtain their consent where required

Data Scraping Example

What kind of data do people scrape?

People scrape a wide variety of data from the internet for various purposes. Here are some common types of data that people often scrape:

a. Web content

This includes scraping data from websites, such as:

- articles

- blog posts

- product descriptions

- prices

- reviews

- ratings

b. Social media data

People scrape social media platforms to collect:

- user profiles

- posts

- comments

- likes

- followers

- other social interactions

c. Business listings

Data can be scraped from online directories, yellow pages, or review websites to gather information about businesses, including:

- names

- addresses

- phone numbers

- ratings & reviews

d. Product data

Scraping e-commerce websites allows users to collect product details like names, descriptions, prices, images, and customer reviews for:

- competitive analysis

- price monitoring

- building product catalogs

e. Financial data

Scraping financial websites, stock exchanges, or cryptocurrency exchanges provides access to real-time or historical data for analysis, trading, or research purposes. This data can cover:

- stocks

- commodities

- currencies (including cryptocurrencies)

f. News and articles

Data can be scraped from news websites, RSS feeds, or blogs to:

- gather information on specific topics

- track trends

- analyze sentiment
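RSS feeds are structured XML, so they are often easier to scrape than news web pages. A minimal sketch using the standard library's `xml.etree.ElementTree` on a made-up RSS 2.0 feed, followed by a simple keyword filter for one topic:

```python
import xml.etree.ElementTree as ET

# Hypothetical feed content; a real feed would be downloaded from the site's RSS URL.
rss = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example News</title>
    <item><title>Markets rally</title><link>https://example.com/1</link>
      <pubDate>Tue, 11 Jul 2023 10:00:00 GMT</pubDate></item>
    <item><title>Storm warning</title><link>https://example.com/2</link>
      <pubDate>Wed, 12 Jul 2023 08:30:00 GMT</pubDate></item>
  </channel>
</rss>"""

root = ET.fromstring(rss)
items = [
    {
        "title": item.findtext("title"),
        "link": item.findtext("link"),
        "published": item.findtext("pubDate"),
    }
    for item in root.iter("item")
]

# Keep only items about a specific topic (simple keyword match on the title).
weather_items = [i for i in items if "storm" in i["title"].lower()]
print(weather_items)
```

The Python documentation notes that `xml.etree.ElementTree` is not secure against maliciously constructed XML, so feeds from untrusted sources are often parsed with a hardened library such as `defusedxml` instead.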

g. Weather data

Scraping weather websites or APIs helps collect:

- current weather conditions

- forecasts

- historical climate data

- other meteorological information

h. Academic research

Researchers often scrape data from scholarly articles, journals, or academic databases to collect data for:

- research

- analysis

- data visualization

i. Public data

Government websites often provide access to public records, census data, crime statistics, or other publicly available information that can be scraped for:

- research

- analysis

- data visualization

j. Job postings

For job market analysis or recruitment purposes, scraping job boards or career websites allows users to collect:

- job listings

- company information

- salaries

- other details

Data Scraping And Privacy

While data scraping can be a valuable tool for gathering data, it also raises concerns about privacy.

Data scraping can potentially impact privacy in several ways:

a. Collection of personal information

When scraping websites, there is a risk of inadvertently collecting personal information such as:

- names

- addresses

- email addresses

- phone numbers

This information might be publicly available on websites, but the act of aggregating and storing it on a large scale without consent can raise privacy concerns.
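If personal details are not needed for the analysis, one mitigation is to redact them before storing the scraped text. A minimal sketch using regular expressions; the patterns are simplified assumptions (the phone pattern only covers North American-style formats) and will miss many real-world variants. Names cannot be reliably caught this way; that would require something like named-entity recognition.

```python
import re

# Simplified, assumed patterns; real-world emails and phone numbers vary far more.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"(?:\(\d{3}\)\s?|\b\d{3}[-.\s])\d{3}[-.\s]\d{4}\b")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


scraped = "Contact Jane at jane.doe@example.com or (555) 123-4567 / 555-987-6543."
print(redact_pii(scraped))  # Contact Jane at [EMAIL] or [PHONE] / [PHONE].
```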

b. Terms of service violations

Many websites have terms of service or usage agreements that explicitly prohibit scraping.

By scraping data from such sites, individuals or organizations may violate these terms, potentially leading to legal consequences.

c. Publicly available but unintended use

Some websites make data publicly available for browsing purposes but may not intend for it to be collected in bulk or used for other purposes.

Data scraping in large quantities can be seen as exploiting unintended access and may infringe upon the privacy expectations of website owners and users.

d. Impact on user experience

Scraping activities can put a strain on the targeted websites’ servers, potentially leading to degraded performance for regular users.

This harms other users, who may face slower page load times or other disruptions caused by scraping activities.

Google And AI Technology

At the 2018 Google I/O conference, held May 8-10 at the Shoreline Amphitheatre in Mountain View, California, Google made an announcement regarding Google Research.

According to TechTarget, Google Research, the company’s research and development division for its artificial intelligence (AI) applications, was rebranded as Google AI.

Through Google AI, the company will develop and upgrade its products (e.g., Google Search, Google Assistant, and Google Maps) and conduct research in various fields, including:

- robotics

- deep learning

- machine learning (ML)

Per Cointelegraph, Google recently updated its privacy policy to state that it may use publicly available data, whenever it deems suitable, for its AI-related activities.

Specifically, the revised privacy policy says Google may use:

- “information . . . publicly available online”

- “other public sources”

In other words, when people share something publicly online, Google may use it to develop its AI systems.

Because these AI data scraping plans could compromise people’s personal information, it’s no surprise that Google is now being sued over them.

Reuters reported that on July 11, 2023, eight people filed a class-action complaint against Google in San Francisco.

According to a copy of the complaint, the plaintiffs list 10 violations allegedly committed by Google.

In a released statement, Ryan Clarkson, the plaintiffs’ attorney, said:

“Google does not own the internet . . . our creative works . . . our expressions of our personhood, pictures of our families and children, or anything else simply because we share it online.” - Attorney Ryan J. Clarkson

In June 2023, the same Malibu-based Clarkson Law Firm filed a similar lawsuit against OpenAI, the company behind ChatGPT, which Elon Musk co-founded.


Google And Data Collection

Google data collection refers to the process by which Google gathers and stores information about its users and their activities across its various services and platforms.

As a company, Google offers a wide range of products, such as:

- search

- Gmail

- YouTube

- Android

- Google Maps

- Google Classroom

Google collects data from users to:

- personalize and improve its services

- deliver targeted advertisements

- generate insights for business purposes

The types of data collected by Google can include:

a. Personal Information

This includes data provided by users during account creation or while using specific services, such as:

- names

- email addresses

- phone numbers

- profile information

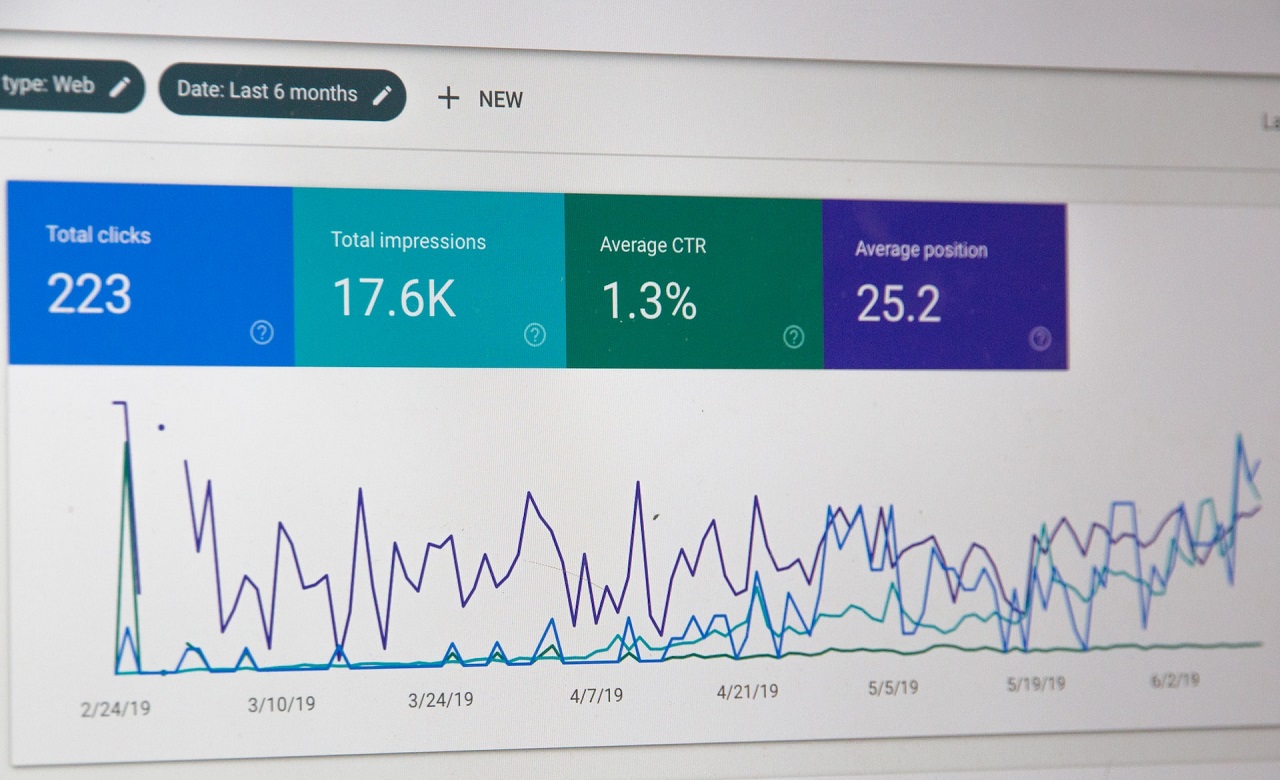

b. Search and browsing history

Google tracks and retains a record of the searches users perform, the websites they visit, and the content they interact with.

This data helps Google:

- improve search results

- personalize recommendations

- serve targeted ads

c. Location information

If users enable location services on their devices or use Google Maps, Google can collect data about their precise or approximate location.

This data is used to:

- offer location-based services

- improve mapping accuracy

- provide personalized recommendations

d. Device information

Google collects information about the devices users use to access its services, including the:

| device type | unique device identifiers |

| operating system version | network information |

This data helps optimize services for different devices and troubleshoot issues.

e. App and service usage

Google collects data about how users interact with its apps and services.

This includes information such as:

- app installations

- feature usage

- performance statistics

It helps Google improve user experiences and identify potential issues.

f. Advertisements and purchases

Google collects data related to the ads users interact with, including the ads they view, click on, or make purchases through.

This information is used to tailor ads to users’ interests and measure ad effectiveness.

Google’s data collection practices are governed by its privacy policy, and users have some control over the data collected and how it is used through privacy settings.

However, it’s always advisable to review the privacy policies and settings of the specific Google services you use to understand and manage your data preferences.

According to Google’s website, the latest privacy policy update took effect on July 1, 2023. Before that, the policy was last updated on December 15, 2022.

People Also Ask

What Is Online Privacy?

Per Winston & Strawn (est. 1853), an international law firm with headquarters in Chicago:

“The definition of online privacy is the level of privacy protection an individual has while connected to the Internet.” - Winston & Strawn LLP

In addition, computer privacy involves how an individual’s “personal information is used, collected, shared, and stored” on his/her devices (e.g., PC and smartphone) and “while on the Internet.”

What Is a Data Scraping Tool?

A data scraping tool is a software application or program designed to extract information or data from:

- websites

- databases

- any other online sources

It automates the process of gathering data by:

- accessing web pages

- interpreting the HTML or XML code

- extracting the desired data points

What Are Some Web Scraping Tools For Data Science?

According to Analytics Vidhya, they include:

| Web Scraping Tool | Price Tiers (USD per month) |
| --- | --- |
| AvesAPI | $50, $125, $500 |
| Diffbot | $299, $899 |
| Import.io | $199, $599, $1,099 |
| Octoparse | $75, $208 |
| ParseHub | $189, $599 |
| Scrape.do | $29, $99, $249 |
| Scraper API | $49, $149, $299, $999 |
| ScrapingBee | $49, $99, $249, $599+ |
| Scrapingdog | $30, $90, $200 |
| Scrapy | Free (open source); Scrapy Cloud starts at $9 |

Final Thoughts

In this age of information overload, data scraping serves as a gateway to unraveling the mysteries and uncovering the hidden gems concealed within the vast digital landscape.

With its ability to extract, gather, and organize data from various online sources, it has become an indispensable tool for businesses, researchers, and developers alike.

Still, while it can be a useful tool, data scraping should be done responsibly and in compliance with relevant laws and website terms of service.

Gordon Dickerson

Author

Gordon Dickerson, a visionary in Crypto, NFT, and Web3, brings over 10 years of expertise in blockchain technology.

With a Bachelor's in Computer Science from MIT and a Master's from Stanford, Gordon's strategic leadership has been instrumental in shaping global blockchain adoption. His commitment to inclusivity fosters a diverse ecosystem.

In his spare time, Gordon enjoys gourmet cooking, cycling, stargazing as an amateur astronomer, and exploring non-fiction literature.

His blend of expertise, credibility, and genuine passion for innovation makes him a trusted authority in decentralized technologies, driving impactful change with a personal touch.

Tyreece Bauer

Reviewer

A trendsetter in the world of digital nomad living, Tyreece Bauer excels in Travel and Cybersecurity. He holds a Bachelor's degree in Computer Science from MIT (Massachusetts Institute of Technology) and is a certified Cybersecurity professional.

As a Digital Nomad, he combines his passion for exploring new destinations with his expertise in ensuring digital security on the go. Tyreece's background includes extensive experience in travel technology, data privacy, and risk management in the travel industry.

He is known for his innovative approach to securing digital systems and protecting sensitive information for travelers and travel companies alike. Tyreece's expertise in cybersecurity for mobile apps, IoT devices, and remote work environments makes him a trusted advisor in the digital nomad community.

Tyreece enjoys documenting his adventures, sharing insights on staying secure while traveling and contributing to the digital nomad lifestyle community.
