Generative Model - What Are Its Categories For Brain Dynamics Study?

A generative model describes how the data are distributed and can assign a probability to any given observation. For instance, models that predict the next word in a sequence are often generative because they can assign a probability to a string of words. Generative models are a large family of machine learning algorithms that make predictions by modeling the joint distribution P(x, y).

Author: Karan Emery | Reviewer: Daniel James | Jan 09, 2023

As new data-collection methods and computing resources have appeared, neuroscientists have adapted these tools to address problems specific to their field.

Generative model architectures are a class of tools that are increasingly able to reproduce the dynamics of individual brain regions and of the whole brain.

For example, a generative model could be a set of equations, grounded in how the system works, that describe how signals recorded from a patient change over time.

In general, generative models outperform black-box models for inference, but not necessarily for pure prediction.

Using a generative model requires estimating more unknowns.

You have to estimate the prior probability of each class, P(y), and the likelihood of an observation given a class, P(x | y).

Multiplying these gives the joint probability, P(x, y) = P(x | y) P(y). Predictions are then made from the joint probability via Bayes' rule, P(y | x) = P(x, y) / P(x), instead of modeling the conditional probability P(y | x) directly.
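A minimal sketch of this recipe in Python, assuming one-dimensional data with a Gaussian likelihood for each class (a Gaussian naive Bayes classifier). The data and function names are hypothetical and chosen only for illustration: the code estimates P(y) and P(x | y), multiplies them into the joint, and predicts the class with the largest joint probability.

```python
import math

def fit_generative_classifier(xs, ys):
    """Estimate the prior P(y) and a Gaussian likelihood P(x | y) for each class."""
    params = {}
    n = len(xs)
    for label in sorted(set(ys)):
        members = [x for x, y in zip(xs, ys) if y == label]
        mean = sum(members) / len(members)
        var = sum((x - mean) ** 2 for x in members) / len(members)
        params[label] = (len(members) / n, mean, var)  # (prior, mean, variance)
    return params

def gaussian_pdf(x, mean, var):
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)

def joint(x, label, params):
    prior, mean, var = params[label]
    return prior * gaussian_pdf(x, mean, var)  # P(x, y) = P(x | y) P(y)

def predict(x, params):
    # argmax_y P(y | x) equals argmax_y P(x, y), because P(x) is shared by all classes
    return max(params, key=lambda label: joint(x, label, params))

def posterior(x, params):
    # Bayes' rule: normalize the joint probabilities by P(x) = sum_y P(x, y)
    total = sum(joint(x, label, params) for label in params)
    return {label: joint(x, label, params) / total for label in params}

# Hypothetical toy data: class 0 clusters near 1.0, class 1 near 5.0
xs = [0.8, 1.1, 1.3, 0.9, 4.7, 5.2, 5.0, 5.4]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
params = fit_generative_classifier(xs, ys)
print(predict(1.2, params), predict(4.9, params))  # → 0 1
```

Because the model stores a full description of each class, the same parameters could also be used to generate new examples, which a purely discriminative classifier cannot do.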

Generative models have useful properties for analyzing neuroscientific data.

Several hybrid generative models can be used to build interpretable models of brain dynamics.

What Is Generative Modeling?

Generative modeling uses artificial intelligence (AI), statistics, and probability to build a representation or abstraction of observable phenomena or target variables that can be estimated from observations.

In unsupervised machine learning, generative modeling represents the phenomena in data, giving computers a working model of the real world.

This learned knowledge can then be used to estimate various probabilities about a subject from the modeled data.

Generative modeling algorithms analyze large amounts of training data and distill them into a compact representation.

These models are often implemented as neural networks, which can learn to detect the data's inherent distinguishing features.

The networks then use this simplified, core representation of real-world data to generate new data that resembles, or even matches, real-world data.

For example, a generative model may be trained on a collection of real-world photographs in order to create similar new ones.

The model could take observations from a 200GB picture collection and compress them into 100MB of weights.

The weights can loosely be seen as the strengths of connections between neurons. As it is trained, the algorithm learns to create increasingly realistic photos.

Generative Model Vs Discriminative Model

In contrast to generative modeling, discriminative modeling identifies existing data and can be used to categorize it.

Discriminative modeling assigns labels to data and sorts it, while generative modeling creates new data.

In the photo example, a generative model can be improved by a discriminative model, and vice versa. This is the idea behind generative adversarial networks (GANs): the generative model tries to deceive the discriminative model into classifying the produced pictures as genuine, while the discriminative model learns to tell them apart.

Both become more skilled at their tasks as training continues.

When To Use Generative Model

Generative modeling uses AI, statistics, and probability to develop an abstraction or representation of a target variable that can be estimated from observations.

Unsupervised machine learning relies on generative modeling to give computers a model of the world by representing the occurrences in data.

This learned model can then be used to make probabilistic inferences from the modeled data.

Generative methods distill enormous volumes of training data into a compact representation.

Models built this way, often using neural networks, can be trained to recognize the distinctive characteristics of the underlying data.

In turn, the networks use this streamlined representation to generate new data that resembles real-world data.

For example, a generative model can learn from a database of real-world images and then use that knowledge to generate new images with similar subject matter.

Such a model might compress 200GB of images into 100MB of weights.

The weights can loosely be interpreted as the strengths of neural synapses. As it is trained, the algorithm produces increasingly lifelike images.

Generative Model Categories

Generative models of brain dynamics fall into three categories according to their modeling assumptions and aims: biophysical, phenomenological, and computational agnostic models.

Biophysical Model Category

Biophysical models explicitly encode biological assumptions and constraints.

Because the systems they represent have many components and real biological complexity, biophysical models range in scale from very small to very large.

Because of computational limits, larger-scale models usually require greater degrees of simplification.

The Blue Brain Project uses this kind of modeling.

Synaptic Level Model

At the synaptic level, proteins are the smallest interacting components of the nervous system.

Gene expression maps and atlases can help reveal how these components of brain circuits function.

These maps include the spatial distribution of gene expression patterns, neuronal connections, and other large-scale properties, such as dynamic connectivity based on neurogenetic profiles.

Some of these models have contributed to the development of computational neuroscience.

Much research has examined how cellular and intracellular activity relates to brain dynamics.

Models of intracellular processes and their interactions can produce realistic responses at both small and large scales.

Basic Neuronal Biophysics

Brain dynamics appear to be driven by communication between neurons, and information is transferred primarily through the firing of action potentials.

The famous Hodgkin-Huxley equations were the first quantitative model of how ionic currents flowing through membrane channels generate the action potential.

Some frameworks model single neurons in great detail. The multicompartment model, which divides a neuron into many coupled compartments (for example, along its dendrites), can be used to simulate how multiple ion channels excite the cell.

Work continues on simulating large numbers of highly detailed neurons, while other frameworks focus on modeling the biophysics of populations of neurons.
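A minimal sketch of the compartmental idea, assuming a hypothetical chain of five electrically coupled compartments with current injected into the first one (the "soma"). For brevity each compartment has only a passive leak conductance; a full multicompartment model would add active ion channels, such as Hodgkin-Huxley sodium and potassium currents, to each compartment. All conductances, the capacitance, and the injected current are illustrative values.

```python
def simulate_compartments(n_comp=5, i_inject=1.0, t_max=200.0, dt=0.01,
                          c_m=1.0, g_leak=0.1, g_couple=0.5, e_leak=-65.0):
    """Passive chain of compartments; current is injected into compartment 0 (the 'soma').
    Returns the membrane potential of every compartment at t_max (forward Euler)."""
    v = [e_leak] * n_comp
    for _ in range(int(t_max / dt)):
        dv = []
        for i in range(n_comp):
            current = -g_leak * (v[i] - e_leak)          # leak through this compartment's membrane
            if i > 0:
                current += g_couple * (v[i - 1] - v[i])  # axial current from the neighbor on one side
            if i < n_comp - 1:
                current += g_couple * (v[i + 1] - v[i])  # axial current from the neighbor on the other side
            if i == 0:
                current += i_inject                      # injected current enters the first compartment
            dv.append(dt * current / c_m)
        v = [vi + d for vi, d in zip(v, dv)]
    return v

print([round(x, 2) for x in simulate_compartments()])
```

The printed voltages decrease with distance from the injection site, which is the kind of spatial attenuation a compartmental model is built to capture.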

Models For Populations

The first whole-cortex modeling benchmark was a simulation of the six-layered neocortex of a cat, which served as a foundation for programs such as the Blue Brain Project.

The first simulated subject was a juvenile rat brain fragment, 2 mm in height and 210 µm in radius.

Both technical constraints and the purpose of the study should determine a model's scale. A brain runs on about 20 watts of power, whereas a supercomputer requires megawatts.

Neuromorphic hardware aims to narrow this gap. IBM TrueNorth chips comprise 4,096 neurosynaptic cores, amounting to about 1 million digital neurons and 256 million synapses. NeuCube is a 3D spiking neural network (SNN) with synaptic plasticity that learns connections between neural populations from various modulations of spatio-temporal brain data (STBD).
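The TrueNorth totals follow from its per-core layout. Assuming the published design of 256 neurons per core and a 256 x 256 synaptic crossbar per core (details not stated in this article), the arithmetic works out as below; "1 million" and "256 million" are binary round numbers, 2^20 neurons and 256 x 2^20 synapses.

```python
# Assumed TrueNorth core layout: each neurosynaptic core has 256 neurons and a
# 256 x 256 synaptic crossbar (256 input axons x 256 neurons).
CORES = 4096
NEURONS_PER_CORE = 256
SYNAPSES_PER_CORE = 256 * 256

neurons = CORES * NEURONS_PER_CORE     # 2**20 neurons
synapses = CORES * SYNAPSES_PER_CORE   # 2**28 = 256 * 2**20 synapses
print(neurons, synapses)  # → 1048576 268435456
```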

Phenomenological Model Category

Statistical physics and complex-systems science offer well-developed methods that can be used to simulate the brain.

This is possible because neuronal populations behave in ways similar to systems described by existing physical models.

Such models impose some dynamical priors, but these do not come from fundamental biological assumptions.

For example, fitting a Kuramoto oscillator model means searching for the parameters that best reproduce the system's observed behavior.

These parameters describe properties of the phenomenon (such as how strong the synchronization is), but they say little about the organism's underlying structure.
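A minimal simulation sketch of the Kuramoto model, d(theta_i)/dt = omega_i + (K/N) * sum_j sin(theta_j - theta_i), assuming Gaussian natural frequencies and hypothetical parameter values. It reports the order parameter r, which ranges from near 0 (incoherent phases) to 1 (full synchrony) and is the kind of phenomenon-level quantity described above. Fitting the model to data would mean searching over parameters such as the coupling strength K until the simulated behavior matches the recordings; that search is not shown.

```python
import numpy as np

def kuramoto_order_parameter(coupling, n=200, t_max=50.0, dt=0.05, seed=0):
    """Simulate n Kuramoto oscillators with forward Euler and return the
    order parameter r averaged over the second half of the run."""
    rng = np.random.default_rng(seed)
    omega = rng.normal(0.0, 1.0, n)           # natural frequencies
    theta = rng.uniform(0.0, 2 * np.pi, n)    # random initial phases
    steps = int(t_max / dt)
    r_values = []
    for step in range(steps):
        z = np.exp(1j * theta).mean()         # complex order parameter r * exp(i * psi)
        # Mean-field form: (K/N) sum_j sin(theta_j - theta_i) = K * Im(z * exp(-i * theta_i))
        theta = theta + dt * (omega + coupling * np.imag(z * np.exp(-1j * theta)))
        if step >= steps // 2:
            r_values.append(np.abs(np.exp(1j * theta).mean()))
    return float(np.mean(r_values))

for k in (0.0, 4.0):
    print(k, round(kuramoto_order_parameter(k), 2))
```

With no coupling the phases stay incoherent and r remains small; with strong coupling most oscillators lock together and r approaches 1.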

Problem Formulation, Data Collection, And Tool Development

Phenomenological models aim to quantify how a system's state evolves within a state space defined by the system's state variables.

If two population-level variables describe the state of a neural ensemble, then the state space consists of all possible pairs of their values.

At any given moment, the state of this ensemble can then be expressed as a 2-D vector.

Once such a compact state space is found, the ensemble's state at the next time step can be predicted from its current state.
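As a sketch of a two-variable state space, the code below assumes a Wilson-Cowan-style model in which the two population variables are the mean activities E (excitatory) and I (inhibitory). All weights, time constants, the sigmoid, and the external drive are hypothetical values; the function `next_state` predicts the ensemble's 2-D state one time step ahead from its current state.

```python
import math

def sigmoid(x, gain=1.0, threshold=4.0):
    """Population response function mapping input to an activity level in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-gain * (x - threshold)))

# Hypothetical coupling weights and time constants for an E/I pair
W_EE, W_EI, W_IE, W_II = 16.0, 12.0, 15.0, 3.0
TAU_E, TAU_I = 1.0, 2.0

def next_state(state, drive=1.25, dt=0.1):
    """Predict the 2-D state (E, I) one time step ahead with a forward Euler step."""
    e, i = state
    de = (-e + sigmoid(W_EE * e - W_EI * i + drive)) / TAU_E
    di = (-i + sigmoid(W_IE * e - W_II * i)) / TAU_I
    return (e + dt * de, i + dt * di)

state = (0.1, 0.05)  # the ensemble's state at time 0 as a 2-D vector
trajectory = [state]
for _ in range(500):
    state = next_state(state)
    trajectory.append(state)
print(trajectory[-1])
```

Because dt is no larger than either time constant, each Euler step is a weighted average of the current activity and a sigmoid output, so both variables stay between 0 and 1.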

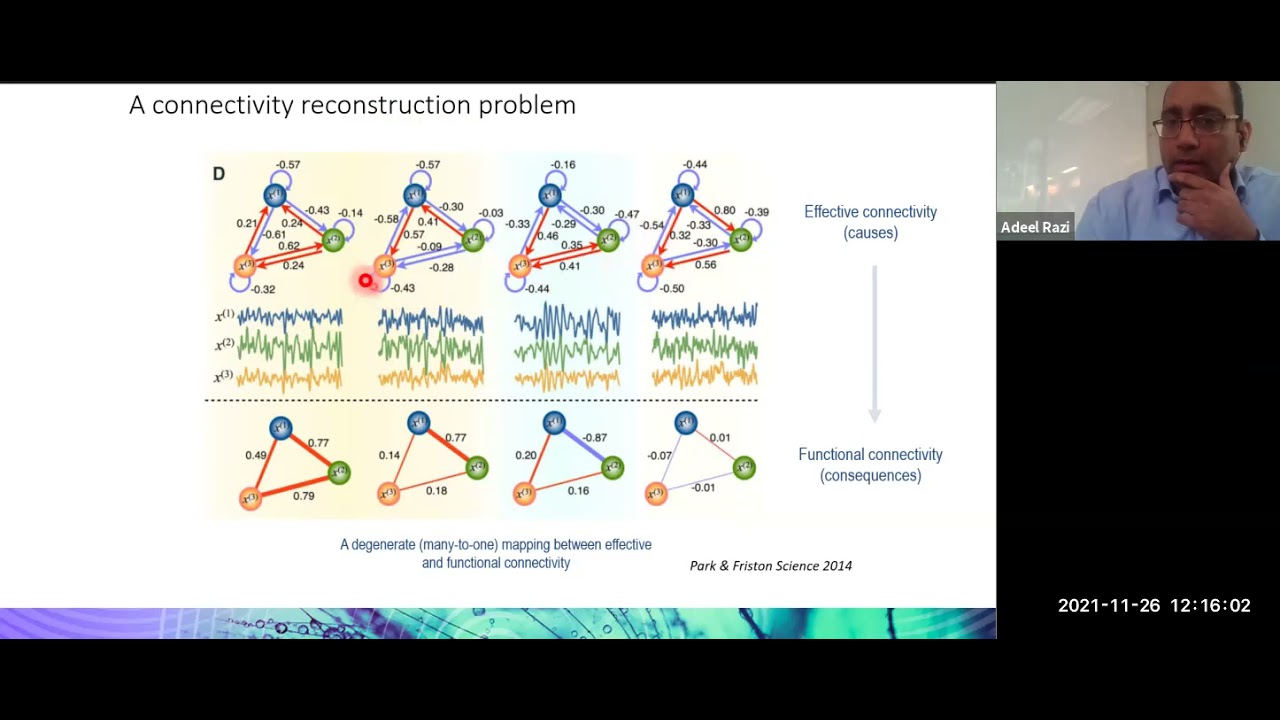

Building models that are biologically plausible and explainable first requires understanding how functionally distinct brain areas interact.

Anatomical (structural) connections are measured from data on physical links between regions, for example from diffusion MRI tractography, while functional connections are defined by statistical correlations between recorded signals.
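A minimal sketch of a functional connectivity matrix, assuming three hypothetical regional time series in which regions A and B share a common driver. Because the matrix contains only pairwise correlations, it says nothing about direction or physical wiring, which is what distinguishes functional from anatomical connectivity.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples = 1000
shared = rng.standard_normal(n_samples)  # a common fluctuation driving regions A and B

# Hypothetical signals from three regions: A and B share a driver, C is independent
signals = np.vstack([
    shared + 0.3 * rng.standard_normal(n_samples),  # region A
    shared + 0.3 * rng.standard_normal(n_samples),  # region B
    rng.standard_normal(n_samples),                 # region C
])

# Functional connectivity: Pearson correlation between every pair of regional time series
fc = np.corrcoef(signals)
print(np.round(fc, 2))
```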

Dynamic Causal Modeling (DCM) is a method for estimating the causal (effective) connectivity parameters that best explain the observed data.
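DCM itself inverts a generative model of neural dynamics (coupled, for fMRI, to a hemodynamic model) using Bayesian methods. The sketch below is a deliberately simplified stand-in, not DCM: it keeps only a linear neural state equation, dx/dt = A x + C u, simulates it with hypothetical connectivity values, and then recovers A and C from the noise-free simulated data by ordinary least squares.

```python
import numpy as np

rng = np.random.default_rng(1)
dt, steps = 0.01, 2000

# Hypothetical "true" effective connectivity: region 0 drives region 1, both self-decay
A_true = np.array([[-1.0, 0.0],
                   [0.8, -1.0]])
C_true = np.array([[1.0],
                   [0.0]])             # the external input enters region 0 only

u = rng.standard_normal((steps, 1))    # random external input
x = np.zeros((steps + 1, 2))
for t in range(steps):
    # Linear neural state equation dx/dt = A x + C u, integrated with forward Euler
    x[t + 1] = x[t] + dt * (A_true @ x[t] + C_true @ u[t])

# Least-squares estimate of [A C] from finite-difference derivatives
dxdt = (x[1:] - x[:-1]) / dt           # shape (steps, 2)
design = np.hstack([x[:-1], u])        # shape (steps, 3): regressors [x, u]
coef, *_ = np.linalg.lstsq(design, dxdt, rcond=None)
A_hat, C_hat = coef[:2].T, coef[2:].T  # coef stacks A^T on top of C^T
print(np.round(A_hat, 3))
```

With real recordings, noise, unobserved states, and an indirect measurement process make this naive regression unreliable, which is why DCM uses a full generative model and Bayesian inference instead.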

Connectivity matrices, whether anatomical or functional, then feed into the information-processing pipeline.

Because most research has focused on mapping these networks onto resting-state networks, many questions about how structure relates to function remain unanswered.

Nonlinear Dynamical Models And Statistical Inspiration

Besides network science, brain data can be understood through well-established methods for describing how physical systems evolve over time.

Well-known examples include spin glasses and various kinds of coupled oscillators.

The Kuramoto model is extensively used in physics to investigate synchronization phenomena.

It applies to neural systems because phase reduction lets each oscillating neural unit be described by a single phase variable.

The Kuramoto model can also be extended to incorporate anatomical and functional connections. Many questions about multistability and its links to maladaptive cognition remain unresolved.

For deep learning problems involving space and time, dynamical systems models may be a good way to add prior information to the data.

Computational Agnostic Model Category

Data-driven algorithms can learn to replicate behavior with minimal prior knowledge if given "enough" data. Such methods include several self-supervised strategies.

The word "enough" captures the major limitation of these strategies.

Such approaches often require unrealistically large datasets and have inherent biases.

In addition, the way these models represent the system or phenomenon may differ greatly from how it actually works.

Existing Models Of Learning

Over the past decade, research has progressed swiftly from single neurons to neural networks.

Simple models that link a single neuron's state to higher-level activity have serious limitations.

Independent Component Analysis (ICA) was used to tackle the blind source separation problem.

Each data sample is a mixture of the states of many sources, and the sources themselves are hidden variables.

Rather than finding directions of maximal variance, as principal component analysis does, ICA recovers the sources by projecting the data onto components that are as statistically independent as possible.
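A minimal sketch of blind source separation with a from-scratch symmetric FastICA, one common ICA algorithm (assumed here; the text does not name a specific one). Two hypothetical sources are mixed by a matrix the algorithm never sees; after whitening, the fixed-point iterations find projections that are as non-Gaussian, and hence as independent, as possible. ICA recovers sources only up to order, sign, and scale, so the check matches each true source to its best-correlated estimate.

```python
import numpy as np

def whiten(X):
    """Center the rows of X (features x samples) and decorrelate them to unit variance."""
    Xc = X - X.mean(axis=1, keepdims=True)
    d, E = np.linalg.eigh(Xc @ Xc.T / Xc.shape[1])
    return E @ np.diag(1.0 / np.sqrt(d)) @ E.T @ Xc

def sym_decorrelate(W):
    """Symmetric orthogonalization: W <- (W W^T)^(-1/2) W."""
    s, U = np.linalg.eigh(W @ W.T)
    return U @ np.diag(1.0 / np.sqrt(s)) @ U.T @ W

def fast_ica(X, n_iter=200, tol=1e-8, seed=0):
    """Symmetric FastICA with the tanh (log-cosh) nonlinearity; returns estimated sources."""
    Z = whiten(X)
    n, m = Z.shape
    W = sym_decorrelate(np.random.default_rng(seed).standard_normal((n, n)))
    for _ in range(n_iter):
        G = np.tanh(W @ Z)
        # Fixed-point update w <- E[z g(w^T z)] - E[g'(w^T z)] w, applied to every row of W
        W_new = sym_decorrelate(G @ Z.T / m - np.diag((1.0 - G**2).mean(axis=1)) @ W)
        converged = np.max(np.abs(np.abs(np.diag(W_new @ W.T)) - 1.0)) < tol
        W = W_new
        if converged:
            break
    return W @ Z

# Two hypothetical sources, mixed by a matrix that the algorithm never sees
t = np.linspace(0, 4 * np.pi, 4000, endpoint=False)
S = np.vstack([np.sin(2 * t), np.sign(np.sin(3 * t))])
A = np.array([[1.0, 1.0], [0.5, 2.0]])
X = A @ S              # observed mixtures: each sample combines both sources
S_hat = fast_ica(X)    # recovered up to order, sign, and scale

# Match each true source to its best-correlated recovered component
corr = np.abs(np.corrcoef(np.vstack([S, S_hat]))[:2, 2:])
print(np.round(corr.max(axis=1), 3))
```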

Capturing long-term memory remains a challenge for common sequential models such as transformers, and the computation, data, and optimization complexity they require is significant.

A biologically inspired liquid state machine outperforms other artificial neural networks, including long short-term memory (LSTM) networks, on the reported accuracy measures, although its performance drops when the nonlinear activation function is removed.

A liquid state machine with granular layers that mimic the structure and wiring of the cerebellum and cerebral cortex also outperforms recurrent neural networks.
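A liquid state machine is a form of reservoir computing: a fixed, randomly connected spiking network (the "liquid") transforms the input, and only a simple readout is trained. To keep the code short, the sketch below swaps in the closely related rate-based echo state network, so it illustrates the reservoir idea rather than a spiking liquid state machine or the cerebellum-inspired architecture. The reservoir size, scalings, ridge penalty, and the sine-prediction task are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_res, washout = 200, 100

# Fixed random reservoir, rescaled to spectral radius 0.9 so that old inputs fade out
W = rng.normal(0.0, 1.0, (n_res, n_res))
W *= 0.9 / np.max(np.abs(np.linalg.eigvals(W)))
W_in = rng.uniform(-0.5, 0.5, n_res)

def run_reservoir(inputs):
    """Drive the untrained reservoir with a 1-D input sequence and collect its states."""
    state = np.zeros(n_res)
    states = []
    for u in inputs:
        state = np.tanh(W @ state + W_in * u)
        states.append(state.copy())
    return np.array(states)

# Task: one-step-ahead prediction of a sine wave
signal = np.sin(0.2 * np.arange(1201))
states = run_reservoir(signal[:-1])[washout:]  # drop the initial transient
targets = signal[1:][washout:]

# Train only the linear readout, using ridge regression
ridge = 1e-6
W_out = np.linalg.solve(states.T @ states + ridge * np.eye(n_res), states.T @ targets)
rmse = np.sqrt(np.mean((states @ W_out - targets) ** 2))
print(f"training RMSE: {rmse:.2e}")
```

Training reduces to one linear solve because the recurrent weights are never updated, which is a large part of the appeal of reservoir approaches.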

There is no easy way to determine the appropriate architecture and hyperparameters for a given task.

Two attention-based models have been proposed to deal with these problems: transformers and recurrent independent mechanisms (RIMs).

Transformers are a relatively new class of machine learning models that have recently shown state-of-the-art performance in sequence modeling applications such as natural language processing.

Foundation models are often built using transformers.
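Transformers are built on attention. A minimal single-head sketch of scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, assuming random (untrained) projection matrices and a hypothetical five-token input; real transformers add learned weights, multiple heads, masking, and feed-forward layers.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V: each output row is a weighted average of the rows of V."""
    d_k = Q.shape[-1]
    weights = softmax(Q @ K.T / np.sqrt(d_k), axis=-1)
    return weights @ V, weights

rng = np.random.default_rng(0)
seq_len, d_model = 5, 8
X = rng.standard_normal((seq_len, d_model))  # hypothetical sequence of 5 token embeddings
# Hypothetical random projections; in a trained transformer these are learned
W_q, W_k, W_v = (rng.standard_normal((d_model, d_model)) for _ in range(3))
out, weights = scaled_dot_product_attention(X @ W_q, X @ W_k, X @ W_v)
print(out.shape, weights.sum(axis=1))
```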

Hybrid Techniques For Scientific Machine Learning

For a well-observed system with unclear dynamics, generic function approximators that can identify data dynamics without prior knowledge of the system might be the best solution.

Although particular neural Ordinary Differential Equation techniques for fMRI and EEG data have been deployed, additional deep architectures such as GOKU-net and latent Ordinary Differential Equations are still in the early phases of research.

The main idea is that a set of equations describing the first-order rate of change of a system, dz/dt = f(z, t), can be used to learn the multidimensional principles governing the data.

These models rest on the premise that latent variables can represent the dynamics of the observed data.

The data are assumed to be sampled, regularly or irregularly, from a continuous process whose dynamics are driven by a continuously changing hidden state.
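A minimal sketch of the generative process these models assume: a hidden state z(t) evolves continuously under dz/dt = f(z), with f a small neural network (autonomous, for simplicity), and observations are read out from it at irregular times. The network weights are random rather than learned (in latent ODE models they are typically trained inside a variational autoencoder), and all sizes, the RK4 integrator, and the noise level are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
latent_dim, hidden, obs_dim = 2, 16, 3

# Hypothetical, untrained parameters of the dynamics network f(z) and the observation map
W1, b1 = rng.normal(0, 0.5, (hidden, latent_dim)), np.zeros(hidden)
W2, b2 = rng.normal(0, 0.5, (latent_dim, hidden)), np.zeros(latent_dim)
C = rng.normal(0, 1.0, (obs_dim, latent_dim))

def f(z):
    """dz/dt as a small neural network: the first-order rate of change of the hidden state."""
    return W2 @ np.tanh(W1 @ z + b1) + b2

def rk4_step(z, h):
    k1 = f(z)
    k2 = f(z + 0.5 * h * k1)
    k3 = f(z + 0.5 * h * k2)
    k4 = f(z + h * k3)
    return z + h / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)

def integrate(z, t0, t1, max_step=0.05):
    """Advance z from t0 to t1 with RK4, splitting the interval into small substeps."""
    n = max(1, int(np.ceil((t1 - t0) / max_step)))
    h = (t1 - t0) / n
    for _ in range(n):
        z = rk4_step(z, h)
    return z

# Irregular observation times; the latent state evolves continuously between them
times = np.sort(rng.uniform(0.0, 10.0, 12))
z = rng.standard_normal(latent_dim)  # initial latent state z(0)
t_prev, observations = 0.0, []
for t in times:
    z = integrate(z, t_prev, t)
    observations.append(C @ z + 0.05 * rng.standard_normal(obs_dim))  # noisy linear readout
    t_prev = t
observations = np.array(observations)
print(observations.shape)  # → (12, 3)
```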

Machine learning methods are already widely used for brain state classification and regression.

Hybrid models, however, hold much more promise than black-box, data-intensive classifiers.

They can be used as generative models and to test biophysical and system-level assumptions.

People Also Ask

Is Gaussian Process A Generative Model?

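In a sense, yes. A Gaussian process defines a probability distribution over functions, so new functions can be sampled from it. When used for regression, however, it models the distribution of outputs given inputs, which is why Gaussian process regression is often classed as a discriminative model.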
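To illustrate the generative side of a Gaussian process, the sketch below draws random functions from a GP prior. The squared-exponential (RBF) kernel, its parameters, and the input grid are assumptions chosen for illustration.

```python
import numpy as np

def rbf_kernel(xa, xb, length_scale=1.0, variance=1.0):
    """Squared-exponential covariance k(x, x') = s^2 * exp(-(x - x')^2 / (2 * l^2))."""
    sq_dist = (xa[:, None] - xb[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / length_scale**2)

x = np.linspace(0.0, 5.0, 50)
K = rbf_kernel(x, x) + 1e-6 * np.eye(len(x))  # small jitter keeps the Cholesky factorization stable
L = np.linalg.cholesky(K)

rng = np.random.default_rng(0)
samples = L @ rng.standard_normal((len(x), 3))  # three functions drawn from the prior, f ~ N(0, K)
print(samples.shape)  # → (50, 3)
```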

What Are Some Real World Use Cases Of Generative Modeling?

- Text-to-image conversion

- Frontal face view generation

- New human pose generation

- Emojis to photos

- Face aging

- Super-resolution

- Photo inpainting

- Clothing translation

How Do You Train Generative Models?

To train a generative model, we first gather a vast quantity of data in some domain (e.g., millions of photos, phrases, sounds, etc.) and then train a model to produce similar data.
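A minimal sketch of the train-then-generate loop, assuming a deliberately tiny model: a two-component, one-dimensional Gaussian mixture fitted with expectation-maximization (EM) to hypothetical data, then sampled to produce new data. Generative models of photos, phrases, or sounds use far larger neural networks, but the two phases are the same.

```python
import numpy as np

def fit_gmm_1d(data, n_iter=100):
    """Fit a two-component 1-D Gaussian mixture with expectation-maximization."""
    weights = np.array([0.5, 0.5])
    means = np.percentile(data, [25, 75]).astype(float)  # spread-out initial guesses
    variances = np.array([data.var(), data.var()])
    for _ in range(n_iter):
        # E-step: responsibility of each component for each data point
        dens = weights * np.exp(-(data[:, None] - means) ** 2 / (2 * variances)) / np.sqrt(2 * np.pi * variances)
        resp = dens / dens.sum(axis=1, keepdims=True)
        # M-step: re-estimate mixture weights, means, and variances from the responsibilities
        nk = resp.sum(axis=0)
        weights = nk / len(data)
        means = (resp * data[:, None]).sum(axis=0) / nk
        variances = (resp * (data[:, None] - means) ** 2).sum(axis=0) / nk
    return weights, means, variances

def sample_gmm(weights, means, variances, n, rng):
    """Generate new data: pick a component for each point, then draw from its Gaussian."""
    comps = rng.choice(len(weights), size=n, p=weights)
    return rng.normal(means[comps], np.sqrt(variances[comps]))

rng = np.random.default_rng(0)
# Hypothetical training data drawn from two clusters
data = np.concatenate([rng.normal(-2.0, 1.0, 1000), rng.normal(3.0, 1.0, 1000)])
weights, means, variances = fit_gmm_1d(data)
new_data = sample_gmm(weights, means, variances, 5, rng)
print(np.round(means, 2), np.round(new_data, 2))
```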

This approach is motivated by a well-known quote from Richard Feynman: "What I cannot create, I do not understand."

What Is The Advantage Of Generative Modelling?

A generative classifier learns a model of each class independently, using only the examples that carry that class's label.

It does not optimize directly for discrimination between classes and does not need to analyze all the data jointly.

As a result, learning is simpler, and the algorithms can run faster.

Is Generative Model Unsupervised?

Generative models make it possible to learn useful features and representations from natural images without labels.

If the learned models are sparse, their features and representations may be more transparent and easier to interpret than those of discriminative models.

Conclusion

Within certain limits, generative models of brain dynamics are widely used in biophysics, complex systems, and artificial intelligence.

Researchers must adapt the model's size and level of abstraction to the problem at hand.

While there is no perfect formula, hybrid solutions might simultaneously address explainability, interpretability, plausibility, and generalizability.

Karan Emery

Author

Karan Emery, an accomplished researcher and leader in health sciences, biotechnology, and pharmaceuticals, brings over two decades of experience to the table. Holding a Ph.D. in Pharmaceutical Sciences from Stanford University, Karan's credentials underscore her authority in the field.

With a track record of groundbreaking research and numerous peer-reviewed publications in prestigious journals, Karan's expertise is widely recognized in the scientific community.

Her writing style is characterized by its clarity and meticulous attention to detail, making complex scientific concepts accessible to a broad audience. Apart from her professional endeavors, Karan enjoys cooking, learning about different cultures and languages, watching documentaries, and visiting historical landmarks.

Committed to advancing knowledge and improving health outcomes, Karan Emery continues to make significant contributions to the fields of health, biotechnology, and pharmaceuticals.

Daniel James

Reviewer

Daniel James is a distinguished gerontologist, author, and professional coach known for his expertise in health and aging.

With degrees from Georgia Tech and UCLA, including a diploma in gerontology from the University of Boston, Daniel brings over 15 years of experience to his work.

His credentials also include a Professional Coaching Certification, enhancing his credibility in personal development and well-being.

In his free time, Daniel is an avid runner and tennis player, passionate about fitness, wellness, and staying active.

His commitment to improving lives through health education and coaching reflects his passion and dedication in both professional and personal endeavors.
