Optimize Your Workflow - Streamline Your Data Analysis With A Practical Web Page To Excel Guide

Unlock hidden insights from web pages by converting them into Excel spreadsheets. Our practical web page to Excel guide empowers researchers, marketers, and data analysts to make informed decisions.

Author: Tyreece Bauer | Reviewer: Gordon Dickerson | Jan 29, 2024

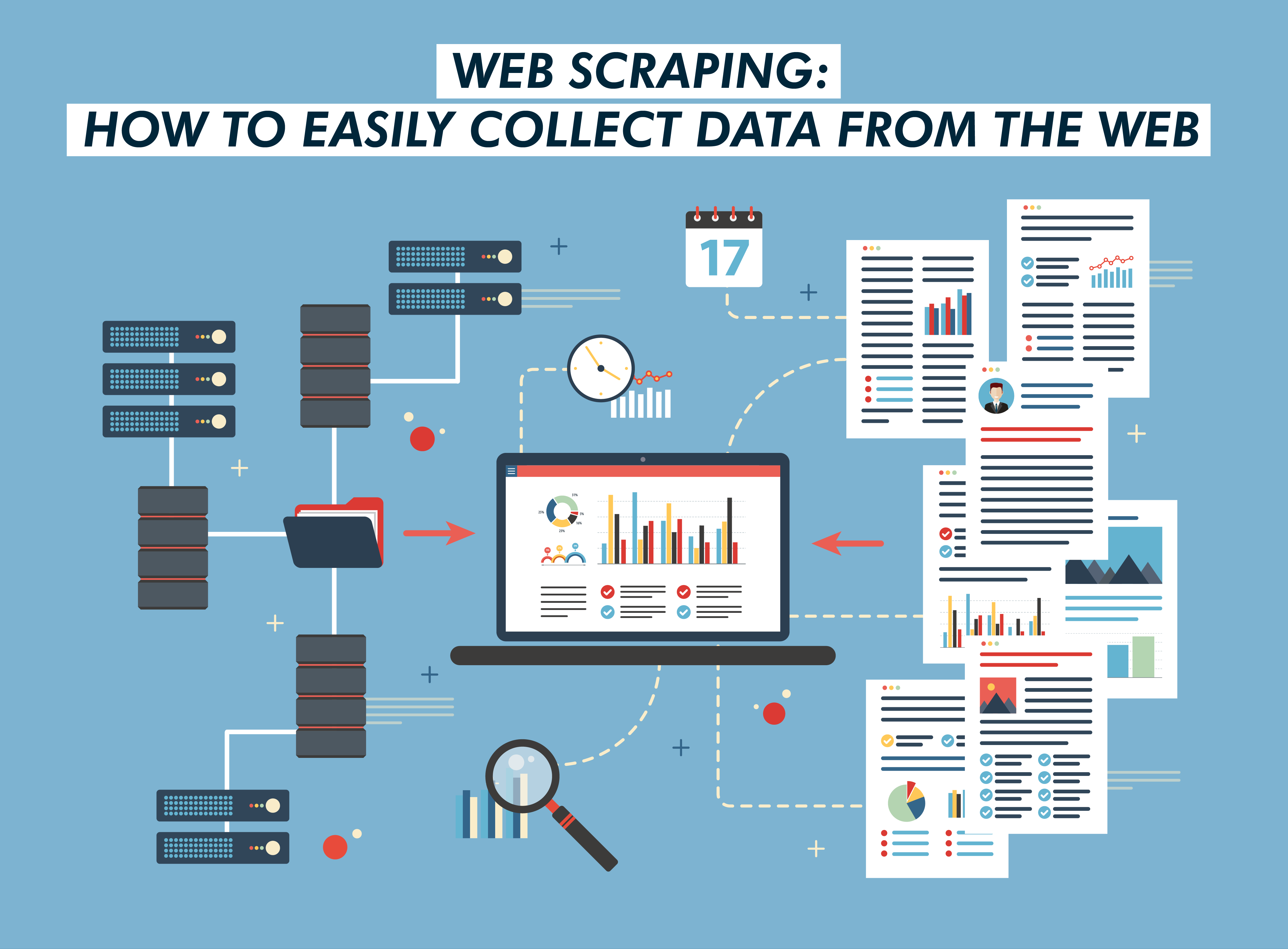

In a data-driven world, researchers, marketers, and data analysts are constantly seeking efficient methods to extract and analyze valuable insights from vast amounts of information. Manually copying data from web pages into Excel spreadsheets is time-consuming and prone to errors. This is where web scraping emerges as a powerful tool for streamlining data analysis and optimizing workflows.

Web scraping, the technique of extracting data from websites using software, enables the automated collection of structured information from web pages. By leveraging web scraping tools, users can seamlessly transfer data from various online sources into Excel spreadsheets, eliminating the need for manual data entry and reducing the risk of human error. This automated approach significantly enhances data collection efficiency, allowing analysts to focus on extracting meaningful insights rather than spending time on tedious data-gathering tasks.

Introduction To Web Data Extraction

In the age of information overload, the ability to effectively extract and analyze data from web pages has become an essential skill for researchers, marketers, and data analysts. Web data extraction, also known as web scraping, is the process of collecting structured information from websites using automated software tools. This technique has revolutionized data acquisition by enabling users to gather vast amounts of information from various online sources, transforming web pages into rich data repositories.

Understanding The Basics Of Data Extraction From Web Pages

At its core, web data extraction involves parsing HTML, the markup that web pages are built from, to identify and extract specific data elements. This process typically involves several steps:

- Target Identification - Defining the specific data elements to be extracted, such as product details, news articles, or financial information.

- URL Acquisition - Gathering a list of URLs or web pages containing the desired data.

- HTML Parsing - Employing software tools to parse the HTML code of each web page and identify the targeted data elements.

- Data Extraction - Extracting the identified data elements and storing them in a structured format, such as CSV, JSON, or Excel spreadsheets.
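To make these steps concrete, here is a minimal sketch in Python that uses only the standard library (`html.parser`, `csv`, `json`). It assumes the page has already been downloaded and that the target data sits in ordinary `<table>` rows; `TableRowParser`, `extract_rows`, `rows_to_csv`, and `rows_to_json` are illustrative names, not part of any particular tool, and nested tables are not handled.

```python
import csv
import io
import json
from html.parser import HTMLParser


class TableRowParser(HTMLParser):
    """Collects the text of every <th>/<td> cell, grouped into <tr> rows."""

    def __init__(self):
        super().__init__()
        self.rows = []     # finished rows, each a list of cell strings
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def _finish_cell(self):
        if self._cell is not None:
            # Collapse newlines and indentation inside the cell to single spaces.
            self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None

    def _finish_row(self):
        self._finish_cell()
        if self._row:  # ignore rows without any cells
            self.rows.append(self._row)
        self._row = None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._finish_row()   # HTML allows </tr> to be omitted
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._finish_cell()  # ...and </td> as well
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._finish_cell()
        elif tag in ("tr", "table"):
            self._finish_row()

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def close(self):
        super().close()
        self._finish_row()


def extract_rows(html):
    parser = TableRowParser()
    parser.feed(html)
    parser.close()
    return parser.rows


def rows_to_csv(rows):
    out = io.StringIO()
    csv.writer(out).writerows(rows)
    return out.getvalue()


def rows_to_json(rows):
    """Use the first row as column names and emit a list of JSON objects."""
    if not rows:
        return "[]"
    header, *body = rows
    return json.dumps([dict(zip(header, row)) for row in body])
```

For example, `extract_rows('<table><tr><th>Name</th><th>Price</th></tr><tr><td>Widget</td><td>$9.99</td></tr></table>')` returns `[['Name', 'Price'], ['Widget', '$9.99']]`, which `rows_to_csv` turns into two CSV lines that Excel can open.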

The Importance Of Streamlining Data Analysis In Modern Workflows

In today's data-driven world, the ability to streamline data analysis is crucial for organizations across various industries. Web data extraction plays a pivotal role in achieving this goal by enabling efficient data collection and transformation.

- Competitive Advantage - By leveraging web data extraction, organizations can access and analyze data that is not readily available through traditional methods. This can lead to better decision-making, improved marketing strategies, and enhanced product development.

- Scalability and Cost-effectiveness - Web scraping tools are highly scalable, allowing organizations to extract data from large volumes of web pages with far less effort than manual collection would require. This is particularly beneficial for data-intensive projects, such as market research and competitive analysis.

- Real-time Data Analysis - Scrapers can be scheduled to collect data at frequent intervals, so organizations can base decisions on the latest available information. This near-real-time insight is particularly valuable in industries where market conditions change rapidly, such as finance and e-commerce.

- Data Enrichment and Integration - Web scraping enables the integration of data from various online sources, enriching data sets and providing a more comprehensive view of the target subject. This integrated data can be used to identify patterns, trends, and correlations that would not be apparent from isolated data sources.

- Automated Data Collection - Because a scraper repeats the same collection steps without manual input, the job can be rerun on demand or on a schedule with consistent results, which keeps data sets current without adding analyst workload.

Setting Up Your Tools For Data Extraction

In data analysis, efficiency matters. As the volume of available data keeps growing, researchers, marketers, and data analysts need ways to streamline their workflows, and web scraping, the process of extracting structured information from websites using software, is one of the most effective.

To get started, you need the right tools and an Excel environment prepared for the incoming data. This section covers how to select a suitable web scraping tool and how to set up Excel for effective data management.

Choosing The Right Web Scraping Tools - A Gateway To Efficient Data Collection

The first step in your web scraping endeavor is to identify and employ the tools that best align with your specific data extraction needs. A plethora of web scraping tools exist, each with its unique strengths and capabilities. To make an informed decision, consider the following factors:

1. Level of Expertise - If you're new to web scraping, consider tools with graphical user interfaces (GUIs). These tools often require little or no coding knowledge, making them ideal for beginners.

2. Data Complexity - Assess the complexity of the data you intend to extract. For simple data structures, basic web scraping tools may suffice. However, for more intricate data or websites with dynamic content, consider employing advanced tools that offer more sophisticated data extraction capabilities.

3. Automation Requirements - Determine your automation needs. If you require regular data extraction from multiple websites, opt for tools with robust automation capabilities that can be integrated into your workflow.

4. Cost Considerations - Evaluate the pricing models of different tools and select one that aligns with your budget. Consider options such as free open-source tools, subscription-based tools, or tools with tiered pricing plans based on usage.

Preparing Excel For Efficient Data Handling - A Foundation For Seamless Data Management

Once you've armed yourself with the right web scraping tools, it's time to optimize your Excel environment to handle the incoming data effectively. Here are some key steps to ensure a smooth data management process:

1. Data Structure Considerations - Before importing data, consider the structure you want it to take in Excel. This will help you organize the data effectively and facilitate further analysis.

2. Data Cleaning and Transformation - Anticipate the need for data cleaning and transformation. Web scraping tools may extract data in raw formats that require cleaning and transformation to make it usable for analysis.

3. Data Visualization Techniques - Familiarize yourself with Excel's data visualization tools, such as charts, graphs, and pivot tables. These tools will help you visualize trends, patterns, and relationships within the data.

4. Macros and Automation - Explore Excel's macro capabilities to automate repetitive tasks, such as data manipulation and formatting. This can save time and effort in the long run.

Step-by-Step Guide To Extracting Data - Identifying Key Data Points And Techniques For Efficient Web Scraping

Businesses and organizations increasingly rely on data analytics to make informed decisions, drive strategic initiatives, and gain a competitive edge. Manually extracting data from web pages, however, is time-consuming and error-prone, which is why web scraping has become an invaluable tool for streamlining data collection and enhancing data analysis capabilities.

Step 1 - Identifying Key Data Points on Web Pages

Before starting the web scraping process, clearly define the data points of interest. This involves understanding the specific information required for analysis and identifying the corresponding HTML elements on the web pages. Your browser's developer tools (right-click an element and choose Inspect) show the HTML tags that contain the desired data, such as table elements (<table>, <tr>, <td>), list elements (<ul>, <li>), or paragraph tags (<p>).
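Once you know which tag holds the data, a parser only needs to collect text from those elements. The Python sketch below (standard library only) gathers the text of every element with a given tag name, optionally filtered by a CSS class. The `headline` class in the example is made up, and the parser assumes the page closes its tags, which not all hand-written HTML does.

```python
from html.parser import HTMLParser


class TagTextCollector(HTMLParser):
    """Collects the text inside every <tag> element, optionally filtered by class."""

    def __init__(self, tag, css_class=None):
        super().__init__()
        self.tag = tag
        self.css_class = css_class
        self.items = []
        self._depth = 0  # > 0 while inside a matching element
        self._buf = []

    def _matches(self, attrs):
        if self.css_class is None:
            return True
        # An element can carry several space-separated classes.
        classes = (dict(attrs).get("class") or "").split()
        return self.css_class in classes

    def handle_starttag(self, tag, attrs):
        if tag != self.tag:
            return
        if self._depth:
            self._depth += 1  # nested element of the same type: fold into the outer one
        elif self._matches(attrs):
            self._depth = 1
            self._buf = []

    def handle_endtag(self, tag):
        if tag == self.tag and self._depth:
            self._depth -= 1
            if self._depth == 0:
                self.items.append(" ".join("".join(self._buf).split()))

    def handle_data(self, data):
        if self._depth:
            self._buf.append(data)


def collect(html, tag, css_class=None):
    parser = TagTextCollector(tag, css_class)
    parser.feed(html)
    parser.close()
    return parser.items
```

With the markup `<ul><li class="headline">First <b>story</b></li><li>Ad</li></ul>`, `collect(html, "li", "headline")` returns `['First story']`: text inside child tags such as `<b>` is kept, and list items without the class are skipped.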

Step 2 - Selecting an Appropriate Web Scraping Tool

A variety of web scraping tools are available, each with its own strengths and limitations. Popular options include Python libraries like Beautiful Soup and Scrapy, as well as dedicated web scraping software like Octoparse and ParseHub. The choice of tool depends on the complexity of the web pages, the programming skills of the user, and the desired level of automation.

Step 3 - Writing Web Scraping Scripts

Once the target data points and web scraping tool have been selected, it is time to write the scraping scripts. These scripts instruct the tool to navigate the web pages, locate the desired HTML elements, and extract the relevant data. The specific syntax and structure of the scripts depend on the chosen tool.
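As a sketch of what such a script can look like in Python without third-party packages, the code below downloads a page with `urllib.request` and collects the links on it, which is how a script navigates from one page (a category listing, say) to the next. The `USER_AGENT` string is a placeholder to replace with your own; libraries such as Beautiful Soup or Scrapy offer richer versions of both steps.

```python
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical identifier: replace with your own tool name and contact address.
USER_AGENT = "example-scraper/0.1 (+mailto:you@example.com)"


def fetch_html(url, timeout=10.0):
    """Download a page and decode it with the charset the server declares."""
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class LinkCollector(HTMLParser):
    """Collects absolute URLs from every <a href="..."> on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links such as "/page/2" against the page URL.
                self.links.append(urljoin(self.base_url, href))


def extract_links(html, base_url):
    parser = LinkCollector(base_url)
    parser.feed(html)
    parser.close()
    return parser.links
```

A typical loop calls `fetch_html` on a starting URL, passes the result to `extract_links` to find the next pages, and feeds each downloaded page to whatever parser extracts your target elements.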

Step 4 - Handling HTML Irregularities and JavaScript Rendering

Web pages can present various challenges for web scraping, such as content loaded dynamically through JavaScript or irregular HTML structures. To overcome these challenges, web scraping tools often render pages in a headless browser so that JavaScript-generated content is present before extraction, and use tolerant parsers or regular expressions to cope with irregular markup.
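JavaScript-rendered content needs a browser automation tool (Selenium and Playwright are common choices) to produce the final HTML before any extraction, so it is not shown here. For irregular markup, though, a regular expression over the page text can be more robust than a parser that expects particular tags. The Python sketch below pulls dollar amounts out of messy text; the price format it matches (a `$` sign, optional comma thousands separators, up to two decimal places) is an assumption to adapt to your data.

```python
import re
from decimal import Decimal

# Assumed format: "$" then digits, optionally grouped with commas, optionally
# followed by cents, e.g. "$1,299.00", "$999", "$ 300.5".
PRICE_RE = re.compile(r"\$\s*(\d{1,3}(?:,\d{3})+|\d+)(\.\d{1,2})?")


def extract_prices(text):
    """Return every dollar amount in `text` as a Decimal, in order of appearance."""
    prices = []
    for whole, cents in PRICE_RE.findall(text):
        # findall returns "" for the optional cents group when it is absent.
        prices.append(Decimal(whole.replace(",", "") + cents))
    return prices
```

`Decimal` is used instead of `float` so that amounts such as 0.10 are stored exactly; because the pattern ignores tags entirely, it still works when prices are wrapped in inconsistent markup like `<s>` or stray `<span>` elements.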

Step 5 - Data Cleaning and Processing

The extracted data may contain unwanted elements, such as HTML tags, formatting characters, or inconsistencies. Data cleaning involves removing these impurities and transforming the data into a structured format suitable for analysis.
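A minimal Python sketch of this cleaning step, assuming cell values arrive as HTML fragments and numbers use US-style formatting (`,` for thousands, `.` for decimals); `clean_cell` and `parse_number` are hypothetical helper names:

```python
import html
import re

TAG_RE = re.compile(r"<[^>]+>")
NUMBER_RE = re.compile(r"-?\d+(?:\.\d+)?")


def clean_cell(raw):
    """Turn a scraped HTML fragment into plain, single-spaced text."""
    text = TAG_RE.sub(" ", raw)  # drop leftover tags first...
    text = html.unescape(text)   # ...then decode entities (&amp; -> &, &nbsp; -> U+00A0)
    # str.split() treats U+00A0 and other Unicode spaces as whitespace,
    # so this also normalizes non-breaking spaces and trims both ends.
    return " ".join(text.split())


def parse_number(raw):
    """Convert text such as '$1,234.50' to a float, or return None if it is not numeric.

    Assumes US-style formatting: ',' as thousands separator, '.' as decimal point.
    """
    cleaned = clean_cell(raw).replace(",", "").replace("$", "").replace(" ", "")
    return float(cleaned) if NUMBER_RE.fullmatch(cleaned) else None
```

Tags are stripped before entities are decoded, so text that was escaped on the page (such as `&lt;b&gt;`) survives as literal characters instead of being mistaken for markup.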

Step 6 - Storing and Managing Extracted Data

The extracted and cleaned data can be stored in various formats, such as comma-separated values (CSV) files, Excel spreadsheets, or databases. Proper organization and metadata labeling are essential for efficient data management.
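If you are writing the data out yourself, a CSV file is the simplest format Excel can open directly. The Python sketch below uses the standard `csv` module and adds two provenance columns (`source_url`, `scraped_at`) as lightweight metadata labels; those column names are illustrative. Producing a native `.xlsx` workbook instead would require a third-party package such as openpyxl.

```python
import csv
from datetime import datetime, timezone


def save_for_excel(rows, path, source_url, scraped_at=None):
    """Write a list of dicts (all with the same keys) to a CSV file for Excel.

    Every row gets two provenance columns: where it came from and when.
    Returns the number of data rows written.
    """
    if not rows:
        return 0
    if scraped_at is None:
        scraped_at = datetime.now(timezone.utc).isoformat(timespec="seconds")
    fieldnames = list(rows[0].keys()) + ["source_url", "scraped_at"]
    # "utf-8-sig" prepends a byte-order mark, which helps Excel recognize UTF-8
    # text (accents, currency symbols); newline="" lets csv control line endings.
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        for row in rows:
            writer.writerow({**row, "source_url": source_url, "scraped_at": scraped_at})
    return len(rows)
```

Recording the timestamp in UTC with an explicit offset keeps rows from different runs comparable even when scrapes happen on machines in different time zones.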

Techniques For Efficient Web Scraping

To maximize the efficiency of web scraping, consider these techniques:

• Target Specific URLs - Instead of scraping entire websites, focus on the specific URLs that contain the desired data.

• Respect Robots.txt - Check each site's robots.txt file and follow its rules on which paths automated clients may access and, where given, how often; ignoring them risks overloading websites and getting your scraper banned.

• Implement Polite Scraping - Spread out scraping requests over time and avoid overwhelming servers.

• Handle Errors Gracefully - Implement error handling to deal with timeouts, failed requests, and unexpected page layouts.

• Monitor Scraper Performance - Regularly review and optimize scraping scripts for efficiency.
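Python's standard library covers several of these techniques. The sketch below checks robots.txt rules with `urllib.robotparser` (including the non-standard but widely used `Crawl-delay` directive) and spaces out requests with a small throttle class; `polite_plan`, `PoliteThrottle`, and the one-second default delay are assumptions for illustration, not recommended values.

```python
import time
import urllib.robotparser


def polite_plan(robots_lines, user_agent, url, default_delay=1.0):
    """Return (allowed, delay_seconds) for fetching `url` under robots.txt rules.

    In practice you would download the file with RobotFileParser.set_url() and
    .read(); parsing lines directly keeps this sketch offline.
    """
    rules = urllib.robotparser.RobotFileParser()
    rules.parse(robots_lines)
    delay = rules.crawl_delay(user_agent)  # None if robots.txt sets no Crawl-delay
    return rules.can_fetch(user_agent, url), (default_delay if delay is None else delay)


class PoliteThrottle:
    """Enforces a minimum gap between consecutive requests to the same site."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock  # injectable so the timing logic can be tested
        self._sleep = sleep
        self._last = None

    def wait(self):
        """Call before each request; sleeps only if the last one was too recent."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

In a scraping loop, you would call `polite_plan` for each URL, skip any URL it reports as disallowed, create one `PoliteThrottle` per site using the returned delay, and call its `wait()` immediately before each request.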

Automating The Data Extraction Process

In data analysis, efficiency and accuracy are paramount. As data volumes grow, manual extraction becomes impractical and error-prone. Web scraping addresses this by automating the extraction of structured data from websites, which streamlines data collection, minimizes human error, and leaves more time for analysis.

Implementing Automation Scripts

The cornerstone of web scraping automation lies in the development and execution of automation scripts. These scripts, typically written in programming languages like Python or R, instruct web scraping software on how to navigate websites, identify relevant data elements and extract the desired information. Automation scripts can be customized to target specific data sources and extract precise data sets, ensuring that only the relevant information is collected.

To implement automation scripts effectively, users must first identify the target websites and the specific data elements they wish to extract. Once these parameters are established, the automation script can be designed to navigate to the target pages, locate the desired data elements, and extract the information in a structured format. For instance, an automation script could be designed to extract product information from an e-commerce website, including product descriptions, prices, and customer reviews.
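Below is a minimal Python sketch of such a script for the e-commerce example. It assumes product pages mark their fields with the CSS classes `product-title`, `price`, and `review`; these class names are hypothetical and must be replaced with whatever the real site uses. The fetching function is passed in, so the same code works with `urllib`, a headless browser, or a test stub.

```python
from html.parser import HTMLParser

# Hypothetical markup: map CSS class names on the product page to output fields.
# Inspect the real site and adjust these before use.
FIELD_CLASSES = {"product-title": "name", "price": "price", "review": "reviews"}


class ProductParser(HTMLParser):
    """Pulls one product record (name, price, list of reviews) from a page."""

    def __init__(self):
        super().__init__()
        self.record = {"name": None, "price": None, "reviews": []}
        self._field = None  # output field currently being captured
        self._tag = None    # tag name of the element being captured
        self._depth = 0     # nesting depth of that tag, to find its real end
        self._buf = []

    def handle_starttag(self, tag, attrs):
        if self._field is not None:
            if tag == self._tag:
                self._depth += 1
            return
        for cls in (dict(attrs).get("class") or "").split():
            if cls in FIELD_CLASSES:
                self._field, self._tag, self._depth, self._buf = FIELD_CLASSES[cls], tag, 1, []
                return

    def handle_endtag(self, tag):
        if self._field is None or tag != self._tag:
            return
        self._depth -= 1
        if self._depth == 0:
            text = " ".join("".join(self._buf).split())
            if self._field == "reviews":
                self.record["reviews"].append(text)
            else:
                self.record[self._field] = text
            self._field = None

    def handle_data(self, data):
        if self._field is not None:
            self._buf.append(data)


def scrape_products(urls, fetch):
    """Fetch and parse each product page, recording failures instead of stopping.

    `fetch` is any function that takes a URL and returns the page's HTML.
    """
    records, failures = [], []
    for url in urls:
        try:
            parser = ProductParser()
            parser.feed(fetch(url))
            parser.close()
            records.append({"url": url, **parser.record})
        except (OSError, ValueError) as exc:  # network/HTTP errors, bad encodings
            failures.append((url, repr(exc)))
    return records, failures
```

Collecting failures in a list instead of letting one bad page stop the run makes the script suitable for scheduled, unattended use, and the failed URLs can simply be retried later.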

Overcoming Common Challenges In Web-to-Excel Data Transfer

Web-to-Excel data transfer, the process of extracting data from web pages and converting it into Excel spreadsheets, has become an indispensable tool for researchers, marketers, and data analysts alike. However, this process can be fraught with challenges that can hinder data accuracy and reliability. In this comprehensive guide, we'll delve into the common challenges faced in web-to-Excel data transfer and explore effective strategies to overcome them.

Troubleshooting Common Data Extraction Issues

- Handling Large Volumes of Data - Extracting large volumes of data from web pages can strain resources and lead to performance issues. To optimize data extraction for large datasets, employ asynchronous or concurrent data extraction techniques and consider cloud-based web scraping services.

- CAPTCHA and Anti-Bot Measures - Websites may implement CAPTCHA or other anti-bot measures to prevent automated data extraction. Proxy servers or rotating IP addresses are sometimes used to get around these restrictions, but doing so can violate a site's terms of service and runs counter to polite scraping; where possible, use the site's official API or ask the site owner for access instead.

- Dynamic or JavaScript-Generated Data - Websites often load content with JavaScript after the initial page is delivered, so that data is missing from the raw HTML that standard web scraping techniques download. To overcome this obstacle, consider using browser automation tools that can interact with dynamic elements and render JavaScript content before extraction.

- Inconsistent or Missing Data - Web pages often contain inconsistent or missing data due to variations in data formatting, coding errors, or website updates. To address this challenge, utilize web scraping tools that can handle diverse data structures and identify patterns in incomplete data sets.
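For the large-volume case, one straightforward option in Python is to fetch pages concurrently with a thread pool from `concurrent.futures`. This is thread-based concurrency rather than `asyncio`, which achieves a similar effect with coroutines; `fetch_all` and its `fetch` argument (any function from URL to page text) are illustrative assumptions.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def fetch_all(urls, fetch, max_workers=4):
    """Fetch many URLs concurrently with a thread pool.

    Returns ({url: body}, {url: error}). Keep max_workers small so the
    target server is not overwhelmed.
    """
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except (OSError, ValueError) as exc:  # other exception types propagate
                errors[url] = repr(exc)
    return results, errors
```

Concurrency multiplies the load on the target server, so combine it with the polite-scraping practices described earlier and keep the worker count modest.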

Ensuring Data Accuracy And Reliability

- Data Quality Monitoring - Establish data quality monitoring processes to continuously assess data accuracy, consistency, and completeness. Implement data quality checks to identify and rectify any deviations from established data quality standards.

- Data Provenance Tracking - Maintain a record of data sources and extraction timestamps to ensure data traceability and facilitate future data audits.

- Data Normalization and Transformation - Web data may be structured differently across sources. Implement data normalization techniques to standardize data formats and structures, facilitating consistent analysis and comparison.

- Data Deduplication and Merging - Web scraping may result in duplicate data from various sources. Employ data deduplication techniques to identify and remove duplicates, ensuring data integrity and consistency.

- Data Validation and Cleaning - Once data is extracted, it's crucial to validate its accuracy and consistency. Implement data validation rules to identify and correct errors, such as missing values, data type inconsistencies, and invalid entries.
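The normalization, validation, and deduplication steps can be chained into a single pass. The Python sketch below assumes a hypothetical schema in which each scraped record has a `name` and a `price` (US-style number formatting), and that products with the same name, ignoring case, are duplicates; real data usually needs a stronger key, such as a product ID or URL.

```python
import re

PRICE_RE = re.compile(r"\d+(?:\.\d+)?")


def normalize(record):
    """Standardize formats so rows from different sources can be compared."""
    name = " ".join(str(record.get("name") or "").split())
    price = str(record.get("price") or "").replace("$", "").replace(",", "").strip()
    return {"name": name, "price": price}


def validate(record):
    """Return a list of problems; an empty list means the record passes."""
    problems = []
    if not record["name"]:
        problems.append("missing name")
    if not PRICE_RE.fullmatch(record["price"]):
        problems.append(f"invalid price {record['price']!r}")
    return problems


def quality_pass(raw_records):
    """Normalize, validate, then deduplicate; returns (clean_rows, rejected_rows)."""
    clean, rejected, seen = [], [], set()
    for raw in raw_records:
        record = normalize(raw)
        problems = validate(record)
        if problems:
            rejected.append((record, problems))
            continue
        key = record["name"].casefold()  # assumed: the name identifies a product
        if key in seen:
            continue  # duplicate of a row already kept
        seen.add(key)
        clean.append({"name": record["name"], "price": float(record["price"])})
    return clean, rejected
```

Validating before deduplicating matters: otherwise an invalid first copy of a record could cause a later, valid copy to be discarded as a duplicate.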

Frequently Asked Questions - Web Page To Excel

Can I Convert A Web Page To Excel?

Yes. If you want to scrape web page information into Excel quickly, you can try a no-code tool like the Nanonets website scraper. This free web scraping tool can scrape website data and convert it into an Excel format.

How Do I Show A Web Page In Excel?

Copy the page's URL, then in Excel select Data > Get & Transform > From Web. Press CTRL+V to paste the URL into the text box, and then select OK. In the Navigator pane, under Display Options, select the table you want (for example, a table named Results), and then select Load.

What Is Excel For The Web?

Excel for the Web (formerly Excel Web App) extends your Microsoft Excel experience to the web browser, where you can work with workbooks directly on the website where the workbook is stored.

Conclusion

Web-to-Excel data transfer has revolutionized the way researchers, marketers, and data analysts handle and analyze web data. By leveraging web scraping techniques, organizations can seamlessly extract valuable insights from vast amounts of online information, streamlining data analysis workflows and enhancing decision-making processes.

By overcoming common challenges and implementing robust data quality assurance practices, organizations can ensure the accuracy, reliability, and integrity of the data they extract, and use it to make informed decisions and pursue data-driven strategies. Applied carefully, web-to-Excel data transfer can transform your data analysis practices.


Tyreece Bauer

Author

A trendsetter in the world of digital nomad living, Tyreece Bauer excels in Travel and Cybersecurity. He holds a Bachelor's degree in Computer Science from MIT (Massachusetts Institute of Technology) and is a certified Cybersecurity professional.

As a Digital Nomad, he combines his passion for exploring new destinations with his expertise in ensuring digital security on the go. Tyreece's background includes extensive experience in travel technology, data privacy, and risk management in the travel industry.

He is known for his innovative approach to securing digital systems and protecting sensitive information for travelers and travel companies alike. Tyreece's expertise in cybersecurity for mobile apps, IoT devices, and remote work environments makes him a trusted advisor in the digital nomad community.

Tyreece enjoys documenting his adventures, sharing insights on staying secure while traveling and contributing to the digital nomad lifestyle community.

Gordon Dickerson

Reviewer

Gordon Dickerson, a visionary in Crypto, NFT, and Web3, brings over 10 years of expertise in blockchain technology.

With a Bachelor's in Computer Science from MIT and a Master's from Stanford, Gordon's strategic leadership has been instrumental in shaping global blockchain adoption. His commitment to inclusivity fosters a diverse ecosystem.

In his spare time, Gordon enjoys gourmet cooking, cycling, stargazing as an amateur astronomer, and exploring non-fiction literature.

His blend of expertise, credibility, and genuine passion for innovation makes him a trusted authority in decentralized technologies, driving impactful change with a personal touch.
